Performance Testing with Gatling

High throughput and low latency are important competitive advantages for a digital product experience.

For API services, high throughput is the ability to handle a large number of requests at a time, and low latency is about how quickly each request is processed and its response returned.
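To make the two terms concrete, here is a minimal sketch of how they are typically computed from raw measurements. The class name, method names and sample latencies are made up for illustration, and the percentile uses the simple nearest-rank method, which may differ slightly from what a given load-testing tool reports.

```java
import java.util.Arrays;

public class LoadMetrics {

    /** Throughput: requests completed per second over the measurement window. */
    public static double throughputPerSecond(int completedRequests, double windowSeconds) {
        return completedRequests / windowSeconds;
    }

    /** Latency percentile (nearest rank): the smallest sample with at least p% of samples at or below it. */
    public static long percentile(long[] latenciesMs, double p) {
        long[] sorted = latenciesMs.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // Illustrative response times in milliseconds, collected over a 2-second window.
        long[] latenciesMs = {120, 95, 110, 480, 105, 98, 130, 102, 99, 101};

        System.out.println(throughputPerSecond(latenciesMs.length, 2.0)); // 5.0 requests/s
        System.out.println(percentile(latenciesMs, 50));                  // 102 ms
        System.out.println(percentile(latenciesMs, 95));                  // 480 ms
    }
}
```

The single slow request (480 ms) barely moves the median but dominates the 95th percentile, which is why latency is usually reported as percentiles rather than as an average.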

JMeter is widely known for performance testing. Plenty of tutorials and guidelines are available online to help. Its GUI-driven approach makes it quick and easy to configure a test. The test plan can be exported to a .jmx file and shared with the team afterwards.

However, writing a scenario-based performance test in JMeter is not quite straightforward, which can make it challenging.

Scenario-based testing simulates a user journey, which usually involves multiple sequential API calls to complete.

We explored Gatling as an alternative tool that supports scenario-based performance testing, to help us understand how the system reacts to a high traffic load ahead of an upcoming marketing campaign.

Key features in Gatling that we found useful:

- Code-based and developer-friendly, with a handful of utility methods for load testing

- Test data can be externalized into a file and fed in random order

- Request bodies can be externalized into files with parameter expressions

- Built on Netty, with low memory overhead when running a high number of HTTP threads on a local machine
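As a rough sketch of how these features fit together, the simulation below uses Gatling's Java DSL (available since Gatling 3.7). It is not the test we actually ran: the base URL, endpoints, file names (`users.csv`, `bodies/login.json`), column names and load numbers are all hypothetical, and it needs the Gatling libraries on the classpath to compile.

```java
import static io.gatling.javaapi.core.CoreDsl.*;
import static io.gatling.javaapi.http.HttpDsl.*;

import io.gatling.javaapi.core.FeederBuilder;
import io.gatling.javaapi.core.ScenarioBuilder;
import io.gatling.javaapi.core.Simulation;
import io.gatling.javaapi.http.HttpProtocolBuilder;

// Hypothetical two-step user journey: log in, then fetch the cart.
public class CheckoutSimulation extends Simulation {

    HttpProtocolBuilder httpProtocol = http
        .baseUrl("https://api.example.com") // assumed base URL
        .acceptHeader("application/json")
        .contentTypeHeader("application/json");

    // Externalized test data: each virtual user picks a random row.
    // Assumed CSV header: username,password
    FeederBuilder<String> users = csv("users.csv").random();

    ScenarioBuilder journey = scenario("Login then view cart")
        .feed(users)
        .exec(
            http("login")
                .post("/login")
                // Externalized request body with parameter expressions, e.g.
                // {"username": "#{username}", "password": "#{password}"}
                .body(ElFileBody("bodies/login.json"))
                .check(status().is(200), jsonPath("$.token").saveAs("token")))
        .pause(1) // think time of 1 second between the calls
        .exec(
            http("view cart")
                .get("/cart")
                .header("Authorization", "Bearer #{token}")
                .check(status().is(200)));

    {
        // Ramp up to 100 virtual users over 60 seconds (illustrative numbers).
        setUp(journey.injectOpen(rampUsers(100).during(60)))
            .protocols(httpProtocol)
            .assertions(
                global().responseTime().max().lt(2000),
                global().failedRequests().percent().lte(1.0));
    }
}
```

The second request reuses the token saved by the first, which is what makes this a scenario rather than a set of independent calls. With the Gatling Maven plugin configured, a simulation like this would typically be run with `mvn gatling:test`.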

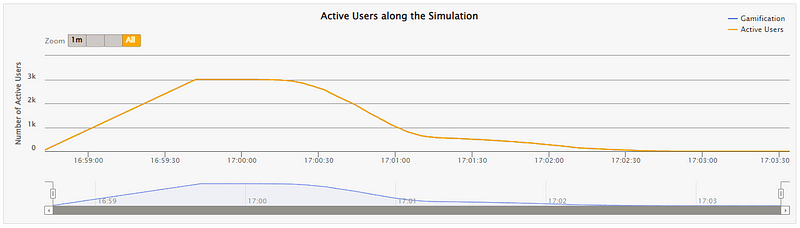

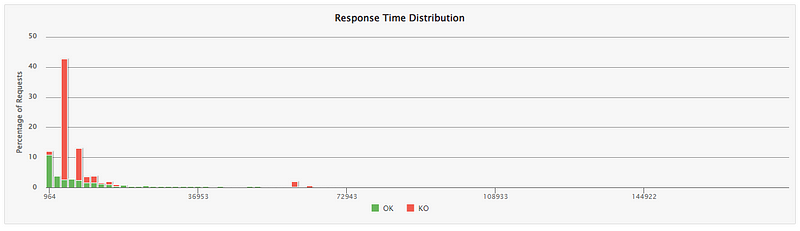

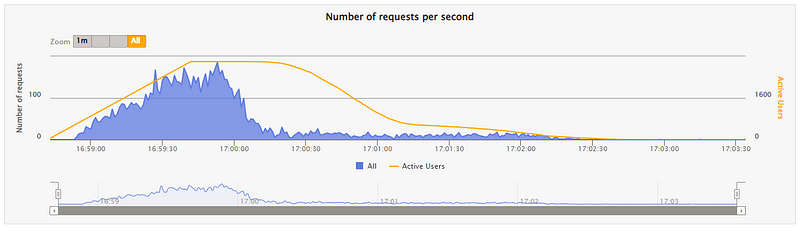

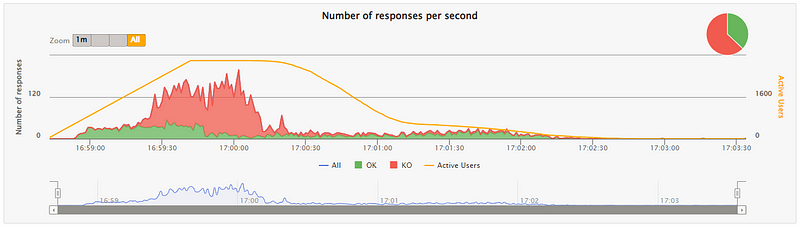

Example test result report,

Common causes of poor API response times:

- Dependency on blocking I/O calls (database, external HTTP)

- High CPU and memory overhead (e.g. due to inefficient code)

High-level approaches to cope with this stress:

- Vertical scaling: increase the maximum number of request threads, then increase the I/O call timeout to wait a little longer rather than fail sooner, then scale up CPU and memory to carry the load.

- Horizontal scaling: increase the number of application instances enough to distribute the traffic and processing load.

Both are typical infrastructure scaling approaches.
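A back-of-the-envelope way to reason about these two options is Little's Law: the average number of in-flight requests equals the arrival rate multiplied by the average time each request spends in the system. The sketch below applies it with made-up numbers (400 requests per second, 200 threads per instance); the class and method names are only for illustration.

```java
public class CapacitySketch {

    /** Little's Law: average in-flight requests = arrival rate x average latency. */
    public static double inFlightRequests(double requestsPerSecond, double avgLatencySeconds) {
        return requestsPerSecond * avgLatencySeconds;
    }

    /** Instances needed if each instance can serve at most maxThreadsPerInstance requests at once. */
    public static int instancesNeeded(double inFlight, int maxThreadsPerInstance) {
        return (int) Math.ceil(inFlight / maxThreadsPerInstance);
    }

    public static void main(String[] args) {
        // Assumed load: 400 requests/s, normally answered in 250 ms.
        double normal = inFlightRequests(400, 0.25);
        // Same load while a slow dependency stretches responses to 2 s.
        double degraded = inFlightRequests(400, 2.0);

        System.out.println(normal);                         // 100.0
        System.out.println(degraded);                       // 800.0
        System.out.println(instancesNeeded(normal, 200));   // 1
        System.out.println(instancesNeeded(degraded, 200)); // 4
    }
}
```

This shows why a longer I/O timeout has to go hand in hand with more threads or more instances: the longer requests are allowed to wait, the more of them are in flight at the same time.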

For the application itself, it’s an opportunity to review the system design and discover better approaches. A few good ideas for improving responsiveness can be adopted from event-driven design, reactive systems principles, etc.
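As one small illustration of the idea (not a full reactive design), the sketch below contrasts calling two independent dependencies one after the other with starting both at once and combining the results when they complete. `slowCall` is a hypothetical stand-in for a database or external HTTP call, simulated here with a sleep; the names and timings are assumptions.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class NonBlockingSketch {

    // Hypothetical stand-in for a blocking dependency call (database, external HTTP).
    static String slowCall(String name, long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return name;
    }

    /** Calls both dependencies in sequence: total time is roughly the sum of the two. */
    public static String sequential(long millis) {
        return slowCall("profile", millis) + "+" + slowCall("orders", millis);
    }

    /** Starts both calls at once and combines the results: total time is roughly the slower call. */
    public static String concurrent(long millis) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            CompletableFuture<String> profile =
                CompletableFuture.supplyAsync(() -> slowCall("profile", millis), pool);
            CompletableFuture<String> orders =
                CompletableFuture.supplyAsync(() -> slowCall("orders", millis), pool);
            return profile.thenCombine(orders, (p, o) -> p + "+" + o).join();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        String a = sequential(200);
        long middle = System.nanoTime();
        String b = concurrent(200);
        long end = System.nanoTime();
        System.out.printf("sequential %s took %d ms%n", a, (middle - start) / 1_000_000);
        System.out.printf("concurrent %s took %d ms%n", b, (end - middle) / 1_000_000);
    }
}
```

This version still occupies a thread per call while waiting, so it only overlaps the waits rather than removing them. A truly non-blocking client or an event-driven design goes further by not holding a thread during the wait at all.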

Performance testing is critical and should be a key discussion point with the team early in the system design activity.